When it comes to big data processing, Spark SQL has become a game-changer for data engineers and analysts. Creating tables in Spark SQL is one of the fundamental skills you need to master if you're diving into the world of distributed data processing. This guide will take you through everything you need to know about Spark SQL create table syntax, from basic commands to advanced techniques. Whether you're a beginner or an experienced pro, there's something here for everyone.

Let's face it, managing large datasets can be overwhelming. But with Spark SQL, you have a powerful tool that simplifies data manipulation and storage. The "CREATE TABLE" command is like the backbone of your data infrastructure. It’s the foundation where all your data transformations begin. This article will break down the syntax step by step, so you can start building tables like a pro.

Before we dive in, it's important to understand why Spark SQL stands out. Unlike traditional SQL databases, Spark SQL can handle massive datasets across clusters, making it perfect for modern data processing needs. So, whether you're working with structured, semi-structured, or unstructured data, Spark SQL has got your back. Now, let's get started!

Read also:Venus Williams Husband A Comprehensive Look At Her Personal Life And Love Story

Understanding Spark SQL Create Table Basics

What is Spark SQL?

First things first, Spark SQL is essentially the module in Apache Spark that allows you to run SQL queries on distributed data. It integrates seamlessly with other Spark components, giving you the ability to process data at scale. Now, when it comes to creating tables, Spark SQL offers a variety of options depending on your use case.

Here’s a quick rundown of what you can do with Spark SQL:

- Create permanent or temporary tables

- Define schemas for your data

- Specify storage formats like Parquet, ORC, or CSV

- Partition and bucket your data for better performance

So, if you're dealing with massive datasets and need a way to organize them efficiently, Spark SQL create table syntax is your best friend.

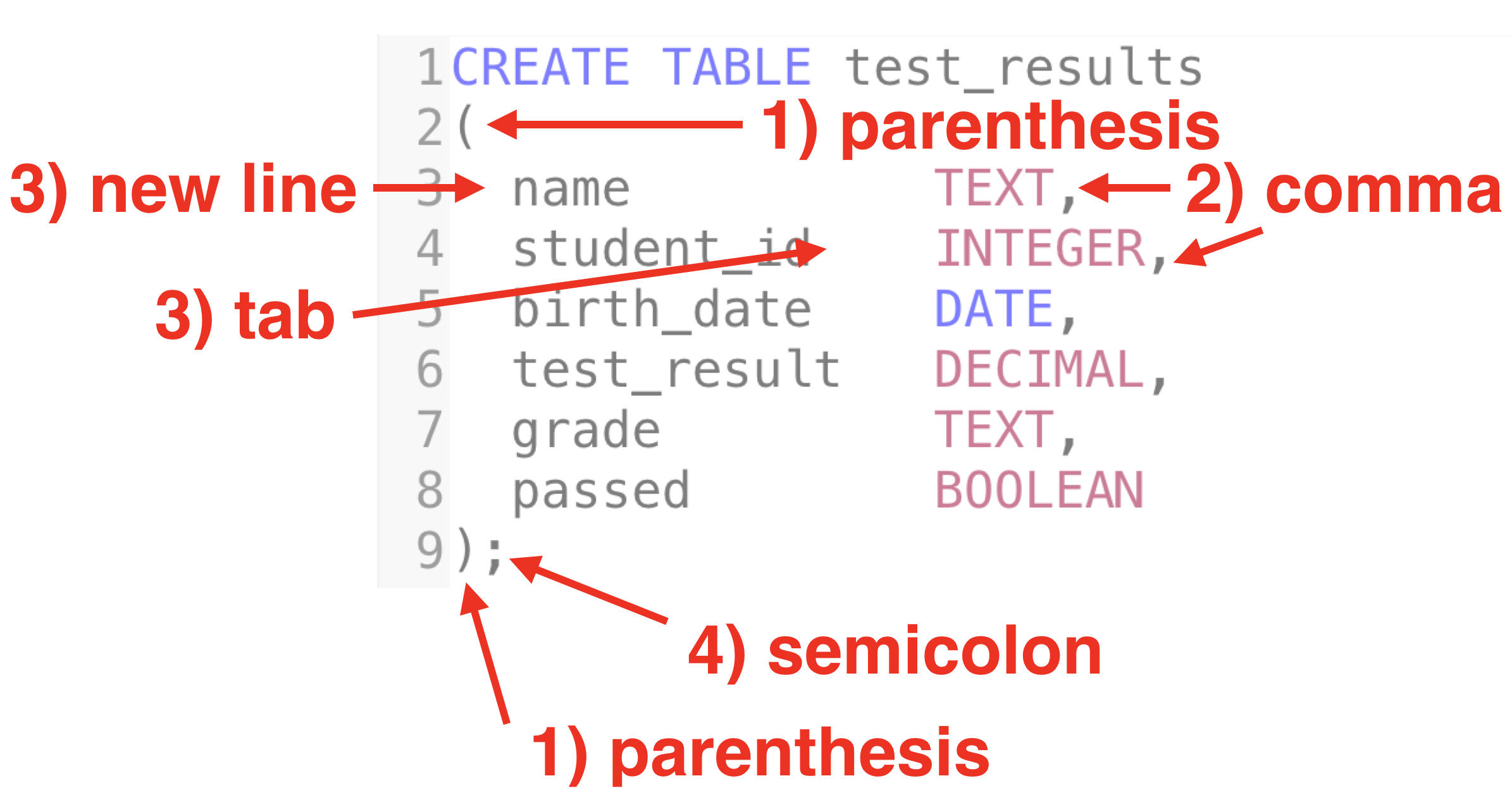

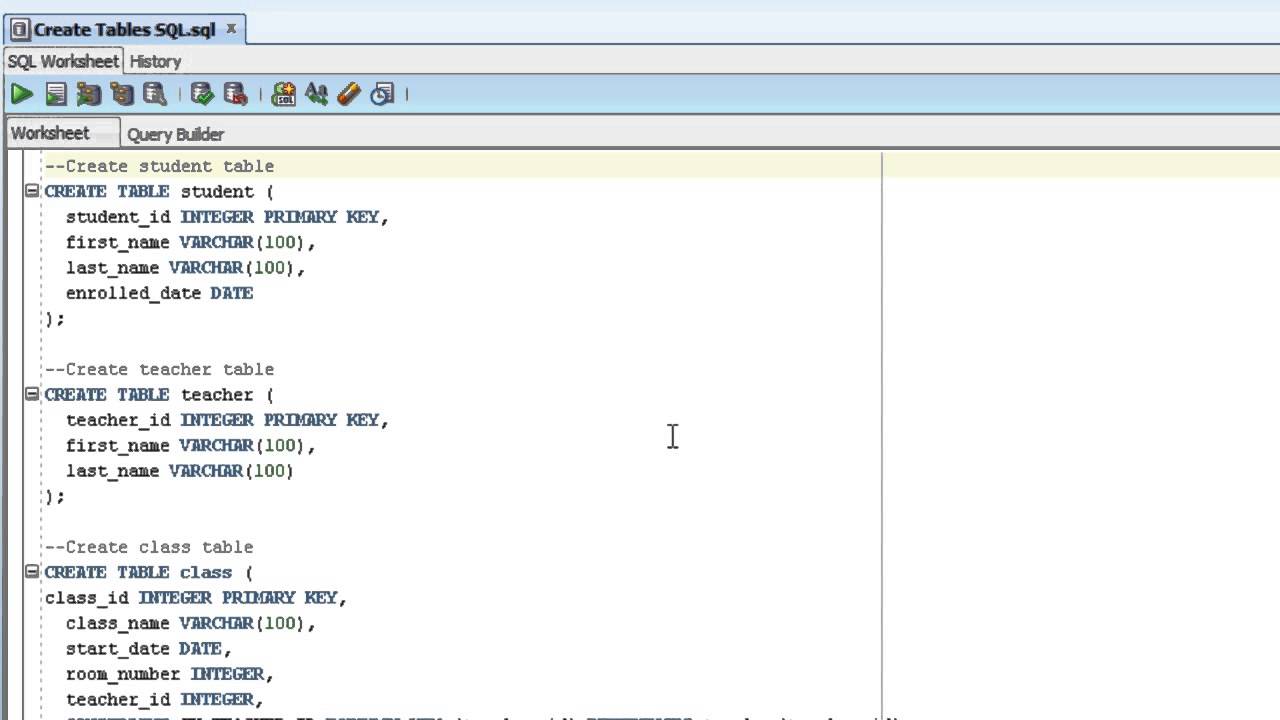

Basic Syntax of CREATE TABLE

Let's take a look at the basic syntax for creating a table in Spark SQL:

CREATE TABLE table_name (column_name data_type, ...);

Simple, right? But there’s more to it. You can also specify additional parameters like storage format, partitioning, and bucketing. For example:

Read also:Matt Czuchrys Wife Meet The Stunning Partner

CREATE TABLE IF NOT EXISTS employees (id INT, name STRING, salary FLOAT) USING PARQUET;

This command creates a table named "employees" with three columns: id, name, and salary. The "USING PARQUET" clause specifies that the data will be stored in Parquet format, which is optimized for performance.

Advanced Features of Spark SQL Create Table

Partitioning Your Data

Partitioning is a technique used to divide your data into smaller, more manageable chunks. This can significantly improve query performance, especially when working with large datasets. In Spark SQL, you can partition your tables by specifying the "PARTITIONED BY" clause.

Here’s an example:

CREATE TABLE sales (id INT, product STRING, amount FLOAT) USING PARQUET PARTITIONED BY (region STRING);

In this case, the "sales" table is partitioned by the "region" column. This means that data will be stored in separate directories for each region, making it easier and faster to query specific regions.

Bucketing for Better Performance

Bucketing is another technique that can boost query performance. It involves grouping data into a fixed number of buckets based on a specific column. This can help reduce shuffle operations during joins, leading to faster query execution.

Here’s how you can create a bucketed table:

CREATE TABLE users (id INT, name STRING, age INT) USING PARQUET CLUSTERED BY (id) INTO 10 BUCKETS;

In this example, the "users" table is bucketed by the "id" column into 10 buckets. This setup can be particularly useful when performing joins on the "id" column.

Temporary vs. Permanent Tables

Temporary Tables

Temporary tables are great for short-lived data that you only need during a specific session. They are automatically dropped when the session ends, so you don’t have to worry about cleaning them up.

Here’s how you can create a temporary table:

CREATE TEMPORARY VIEW temp_table AS SELECT * FROM source_data;

This command creates a temporary view named "temp_table" from the "source_data" table. Temporary views are session-specific and cannot be accessed from other sessions.

Permanent Tables

Permanent tables, on the other hand, persist even after the session ends. They are stored in a metastore and can be accessed by multiple sessions. To create a permanent table, simply omit the "TEMPORARY" keyword:

CREATE TABLE permanent_table (id INT, name STRING) USING PARQUET;

This command creates a permanent table named "permanent_table" with two columns: id and name. The table will remain in the metastore until it is explicitly dropped.

Storage Formats in Spark SQL

Why Storage Format Matters

The storage format you choose can have a significant impact on performance and storage efficiency. Spark SQL supports several storage formats, each with its own strengths and weaknesses. Here are some of the most commonly used formats:

- Parquet: A columnar storage format that is highly optimized for performance.

- ORC: Another columnar format that offers similar performance benefits.

- CSV: A simple text-based format that is easy to read and write but less efficient.

- JSON: A flexible format that is great for semi-structured data.

When choosing a storage format, consider factors like query performance, storage space, and compatibility with your data processing pipeline.

Specifying Storage Format

To specify a storage format in your CREATE TABLE statement, use the "USING" clause. For example:

CREATE TABLE logs (timestamp TIMESTAMP, message STRING) USING ORC;

This command creates a table named "logs" with two columns: timestamp and message. The data will be stored in ORC format, which is optimized for read-heavy workloads.

Best Practices for Spark SQL Create Table

Optimizing Table Design

Designing your tables efficiently can lead to significant performance improvements. Here are some best practices to keep in mind:

- Choose the right storage format: Use Parquet or ORC for performance-critical applications.

- Partition wisely: Partition your data based on frequently queried columns.

- Bucket when necessary: Use bucketing for columns involved in frequent joins.

- Define proper schemas: Ensure your column definitions match the actual data types.

By following these practices, you can ensure that your tables are optimized for both performance and storage efficiency.

Avoiding Common Pitfalls

While Spark SQL is a powerful tool, there are some common pitfalls to watch out for:

- Over-partitioning: Creating too many partitions can lead to small files, which can degrade performance.

- Incorrect data types: Using the wrong data types can lead to inefficient storage and slower queries.

- Ignoring storage formats: Choosing the wrong storage format can result in suboptimal performance.

Being aware of these pitfalls can help you avoid common mistakes and build more efficient tables.

Real-World Examples of Spark SQL Create Table

Example 1: Creating a Simple Table

Let’s say you have a dataset of customer orders and you want to create a table to store it. Here’s how you can do it:

CREATE TABLE orders (order_id INT, customer_id INT, product STRING, quantity INT) USING PARQUET;

This command creates a table named "orders" with four columns: order_id, customer_id, product, and quantity. The data will be stored in Parquet format for optimal performance.

Example 2: Creating a Partitioned Table

Now, let’s say you want to partition the "orders" table by the "customer_id" column. Here’s how you can modify the command:

CREATE TABLE orders (order_id INT, product STRING, quantity INT) USING PARQUET PARTITIONED BY (customer_id INT);

This setup allows you to query specific customers more efficiently by reducing the amount of data scanned during queries.

Data Sources and Integration

Connecting Spark SQL to External Data Sources

Spark SQL can connect to a variety of external data sources, including HDFS, Amazon S3, and relational databases. To create a table from an external source, you can use the "LOCATION" clause to specify the data location.

Here’s an example:

CREATE TABLE external_data (id INT, value STRING) USING PARQUET LOCATION 's3://bucket-name/path/to/data';

This command creates a table named "external_data" from data stored in an S3 bucket. The data will remain in its original location, and Spark SQL will read it on-the-fly.

Integrating with Hive Metastore

Spark SQL can also integrate with Hive metastore, allowing you to leverage existing Hive tables and metadata. To enable this integration, simply configure Spark to use the Hive metastore.

Once configured, you can create tables in Spark SQL that are automatically registered in the Hive metastore. This makes it easy to share data and metadata across different tools and platforms.

Troubleshooting Common Issues

Handling Schema Mismatches

One common issue when creating tables is schema mismatches. This happens when the schema defined in the CREATE TABLE statement doesn’t match the actual data. To avoid this, always validate your data before creating the table.

Here’s a tip: Use the "DESCRIBE" command to inspect the schema of an existing table or data source:

DESCRIBE TABLE source_data;

This will give you a clear view of the column names and data types, helping you avoid schema mismatches.

Resolving Performance Issues

If you’re experiencing slow query performance, consider the following:

- Check your partitioning: Are your partitions too fine-grained or too coarse?

- Review your storage format: Are you using the most efficient format for your workload?

- Optimize your queries: Are your queries written in a way that minimizes unnecessary computations?

By addressing these issues, you can often resolve performance bottlenecks and improve query execution times.

Conclusion: Mastering Spark SQL Create Table Syntax

In conclusion, mastering Spark SQL create table syntax is essential for anyone working with big data. From basic table creation to advanced features like partitioning and bucketing, Spark SQL offers a wide range of tools to help you organize and process your data efficiently.

Remember to choose the right storage format, partition your data wisely, and follow best practices to ensure optimal performance. And don’t forget to validate your schemas and troubleshoot any issues that may arise.

If you found this guide helpful, feel free to leave a comment or share it with your colleagues. And if you’re looking for more tips and tricks on Spark SQL, be sure to check out our other articles. Happy coding!

Here’s a quick recap of what we’ve covered:

- Basic and advanced syntax for creating tables in Spark SQL

- Partitioning and bucketing techniques for improved performance

- Choosing the right storage format for your data

- Best practices and common pitfalls to avoid

- Real-world examples and troubleshooting tips

Now go ahead and start building those tables like a pro!

Table of Contents